MIT researchers have developed an imaging technique that recovers a video of the motion taking place in a hidden scene by observing changes in indirect illumination on a nearby, uncalibrated visible region. The problem is solved by factoring the observed video into the product of two unknown matrices: the hidden scene video and a light transport matrix. The light transport matrix describes how light from each point in the hidden scene reaches each pixel of the visible region, which in practice shows up as subtle changes in shading and shadows.
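As a rough illustration of the factorization step only, the sketch below uses plain non-negative matrix factorization with the Lee and Seung multiplicative updates. This is not the researchers' network-based method. It splits a small synthetic "observed video" `Z` (visible pixels × frames) into a light transport matrix `T` and a hidden video `L` with `Z ≈ T·L`. The function names, matrix sizes and data are all hypothetical.

```python
import random


def matmul(A, B):
    """Multiply an (m x k) matrix A by a (k x n) matrix B, both lists of lists."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def transpose(A):
    return [list(r) for r in zip(*A)]


def nmf(Z, rank, iters=500, seed=0, eps=1e-9):
    """Hypothetical helper: factor non-negative Z (pixels x frames) as T @ L
    using Lee-Seung multiplicative updates for the squared Frobenius error."""
    rng = random.Random(seed)
    m, n = len(Z), len(Z[0])
    # Positive random initialization keeps every update non-negative.
    T = [[rng.random() for _ in range(rank)] for _ in range(m)]
    L = [[rng.random() for _ in range(n)] for _ in range(rank)]
    for _ in range(iters):
        # Hidden-video update: L <- L * (T^T Z) / (T^T T L)
        Tt = transpose(T)
        num = matmul(Tt, Z)
        den = matmul(matmul(Tt, T), L)
        L = [[L[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n)]
             for i in range(rank)]
        # Light-transport update: T <- T * (Z L^T) / (T L L^T)
        Lt = transpose(L)
        num = matmul(Z, Lt)
        den = matmul(T, matmul(L, Lt))
        T = [[T[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(m)]
    return T, L


def relative_error(Z, T, L):
    """||Z - T L||_F / ||Z||_F"""
    R = matmul(T, L)
    diff = sum((z - r) ** 2 for zr, rr in zip(Z, R) for z, r in zip(zr, rr))
    norm = sum(z ** 2 for zr in Z for z in zr)
    return (diff / norm) ** 0.5


# Made-up ground truth: 6 visible pixels lit by 2 hidden points over 8 frames,
# with one hidden point fading out as the other brightens (a moving object).
T_true = [[1, 0], [0, 1], [1, 1], [2, 0.5], [0.5, 2], [1, 2]]
L_true = [[1.0, 0.8, 0.6, 0.4, 0.2, 0.1, 0.1, 0.1],
          [0.1, 0.2, 0.4, 0.6, 0.8, 1.0, 1.0, 0.9]]
Z = matmul(T_true, L_true)

T_est, L_est = nmf(Z, rank=2, iters=2000)
print(relative_error(Z, T_est, L_est))
```

A low reconstruction error does not mean `T_est` and `L_est` match the true factors: plain NMF can fit the data with rescaled or mixed factors, which is exactly the ambiguity a stronger prior has to resolve.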

The project was inspired by recent work on the Deep Image Prior, where factor matrices are parametrized using randomly initialized convolutional neural networks trained in a one-off manner. This results in decompositions that reflect the true motion in the hidden scene. Put simply,

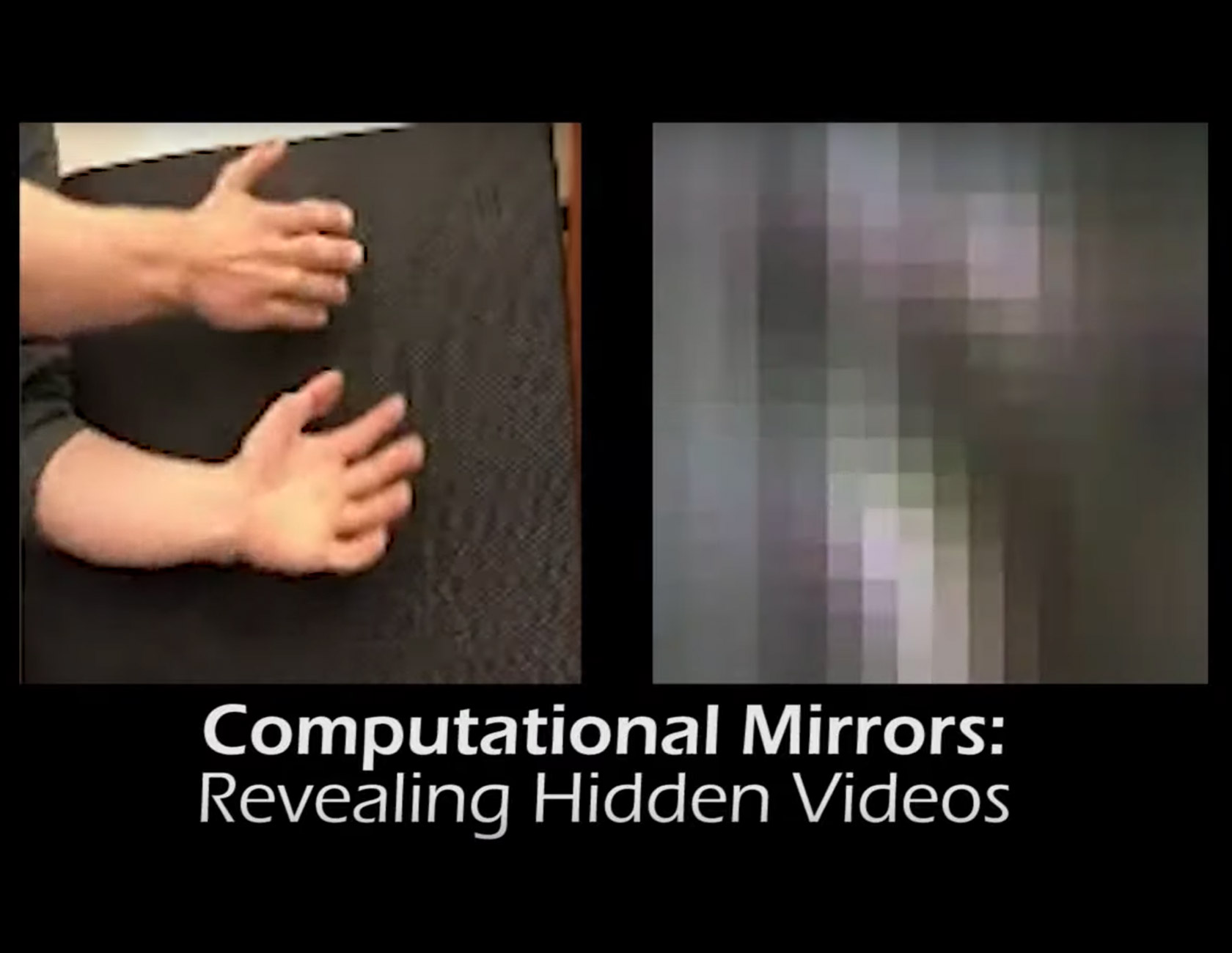

the researchers filmed a pile of scrap, and off-screen, someone created shadows by moving blocks and other objects. The algorithm then predicted the light transport, compared that to the shadows, and then used that data to reconstruct the off-screen video.
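To see why the factorization needs a prior at all, here is a small exact-arithmetic sketch with made-up numbers. It builds two different non-negative factor pairs, related by a hypothetical invertible mixing matrix `M`, that produce exactly the same observed matrix, so the data alone cannot tell them apart. It illustrates the ill-posedness only and is not part of the researchers' method.

```python
from fractions import Fraction as F


def matmul(A, B):
    """Multiply two matrices given as lists of lists."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


# Hypothetical light transport: 4 visible pixels x 2 hidden points.
T = [[F(1), F(0)], [F(0), F(1)], [F(1), F(1)], [F(2), F(1)]]
# Hypothetical hidden video: 2 hidden points x 3 frames.
L = [[F(2), F(3), F(4)], [F(2), F(2), F(2)]]

# An invertible mixing matrix and its exact inverse (M @ M_inv == identity).
M = [[F(1), F(1, 2)], [F(0), F(1)]]
M_inv = [[F(1), F(-1, 2)], [F(0), F(1)]]

T_alt = matmul(T, M)      # stays non-negative because T and M are
L_alt = matmul(M_inv, L)  # row 0 becomes L[0] - L[1]/2, still >= 0 here

Z = matmul(T, L)
Z_alt = matmul(T_alt, L_alt)

print(Z == Z_alt)  # True: same observation, different "hidden video"
print(L_alt)
```

Using `Fraction` keeps the arithmetic exact, so the two products compare equal without floating-point tolerance. Any method that only minimizes reconstruction error scores both factorizations identically.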